Bing Webmaster Tools quietly shipped an AI Performance report last week. It shows how often Copilot cites your content as a source when generating answers.

We pulled our data the same day. And it’s probably the most useful thing Microsoft has done for SEO in years.

Not because the numbers are big (though they are: 19,717 Copilot citations across 91 days), but because the report accidentally reveals something about how RAG retrieval actually works. And I don’t think that was the point.

The citation numbers are interesting but not surprising

One page on our site captured 69% of all Copilot citations. The top four pages got 90%. The other 82 cited pages? They split the remaining 10%.
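If you want to check the same concentration on your own export, here is a minimal sketch. It assumes you have already reduced the report to a simple page-to-citation-count mapping; the page paths and counts below are made up to mirror the 69% / 90% split and are not our real export.

```python
def cumulative_share(citations_by_page, top_n):
    """Fraction of all citations captured by the top_n most-cited pages."""
    counts = sorted(citations_by_page.values(), reverse=True)
    total = sum(counts)
    if total == 0:
        return 0.0
    return sum(counts[:top_n]) / total


# Hypothetical counts, chosen only to illustrate a winner-take-most split.
example_counts = {
    "/guide": 690,
    "/pricing": 110,
    "/faq": 60,
    "/glossary": 40,
    "/blog/a": 30,
    "/blog/b": 25,
    "/blog/c": 25,
    "/blog/d": 20,
}

print(f"Top page:    {cumulative_share(example_counts, 1):.0%}")  # 69%
print(f"Top 4 pages: {cumulative_share(example_counts, 4):.0%}")  # 90%
```

If your top-one and top-four shares look anything like this, most of your cited pages are contributing very little.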

If you’ve been paying attention to AI search, this tracks. AI systems don’t distribute citations the way Google distributes clicks. They pick one or two sources to ground an answer. Being the second-best resource on a topic might mean getting nothing.

So the winner-take-most pattern isn’t news. What’s news is that we can now see it in real data from the platform itself.

The grounding queries are the real story

The report includes something called “grounding queries.” Microsoft presents them alongside citation counts, and at first glance they look like normal search queries.

They’re not.

These are Copilot’s internal retrieval queries — what the system searched for in Bing’s index when it needed a source. Nobody typed “AI search optimization GEO platforms competitor tracking pricing features positioning” into a chat box. That’s a machine-generated query, optimized for Bing’s retrieval system.

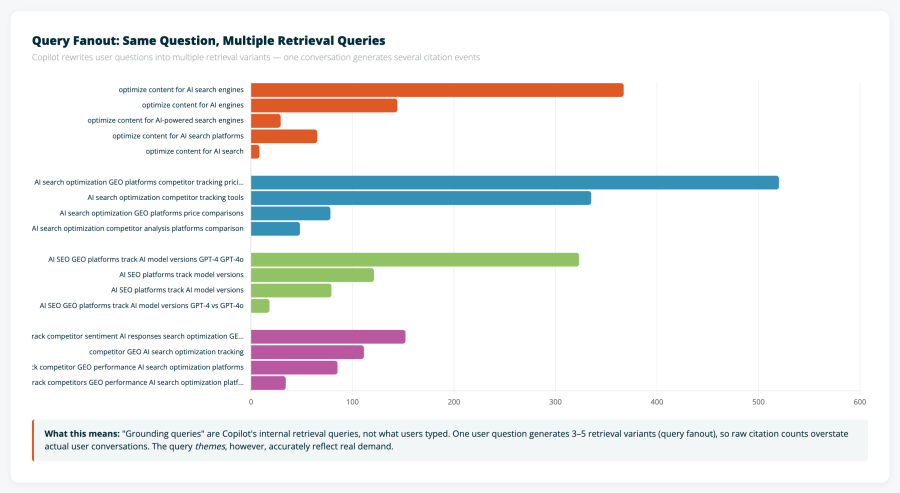

And here’s the thing that caught my eye: for a single user question, Copilot generates multiple retrieval variants.

We found clusters like this in the data:

- “optimize content for AI search engines”

- “optimize content for AI engines”

- “optimize content for AI-powered search engines”

- “optimize content for AI search platforms”

- “optimize content for AI search”

Same intent. Five separate searches. Each one producing a citation event.

So Bing accidentally published a map of how RAG retrieval actually works. The system takes a conversational question, rewrites it into keyword-dense queries, and fans out multiple variants to improve recall. It’s query expansion in real time, and we can see it happening.
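To spot these variant clusters in an export, one rough approach is to group queries by word overlap. The sketch below uses Jaccard similarity on token sets with a greedy pass. The 0.6 threshold and the function names are our own assumptions, and this is a heuristic for reading the data, not a description of how Copilot generates its variants.

```python
import re


def tokens(query):
    """Lowercase word tokens; 'AI-powered' becomes {'ai', 'powered'}."""
    return set(re.findall(r"[a-z0-9]+", query.lower()))


def jaccard(a, b):
    """Shared tokens divided by all tokens across both sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_queries(queries, threshold=0.6):
    """Greedy clustering: each query joins the first cluster whose
    first member (the representative) is similar enough."""
    clusters = []
    for query in queries:
        query_tokens = tokens(query)
        for cluster in clusters:
            if jaccard(query_tokens, tokens(cluster[0])) >= threshold:
                cluster.append(query)
                break
        else:
            clusters.append([query])
    return clusters


grounding_queries = [
    "optimize content for AI search engines",
    "optimize content for AI engines",
    "optimize content for AI-powered search engines",
    "optimize content for AI search platforms",
    "optimize content for AI search",
    "AI search optimization GEO platforms competitor tracking pricing features positioning",
]

clusters = cluster_queries(grounding_queries)
for cluster in clusters:
    print(len(cluster), "variant(s):", cluster[0])
```

On these six queries, the five "optimize content" variants land in one cluster and the long GEO query stays on its own. A lower threshold merges more aggressively; a higher one splits near-duplicates apart.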

What this means (and what it doesn’t)

The query fanout pattern means the raw citation numbers are inflated, because a single conversation can produce several citation events. Our 19,717 citations probably represent 4,000 to 6,000 actual user conversations. Good to know before you put those numbers in a slide deck.
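A back-of-envelope version of that deflation, as a sketch: divide total citations by an assumed number of retrieval variants per question. The fan-out factors of 3 and 5 are our assumptions (the report does not expose a per-conversation count), and the calculation also assumes each variant yields exactly one citation event.

```python
def estimate_conversations(total_citations, variants_per_question):
    """Rough conversation count if every question fans out into the
    same number of variants and each variant produces one citation."""
    return round(total_citations / variants_per_question)


TOTAL_CITATIONS = 19_717  # from our 91-day report

low_estimate = estimate_conversations(TOTAL_CITATIONS, 5)   # heavy fan-out
high_estimate = estimate_conversations(TOTAL_CITATIONS, 3)  # light fan-out

print(low_estimate, high_estimate)  # 3943 6572
```

A fan-out of three to five per question brackets the 4,000 to 6,000 range. Treat it as an order-of-magnitude correction, not a measurement.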

But the query themes are gold. More than 400 unique retrieval queries show which topics people are asking AI about in our area. And they look nothing like Google Search Console queries. They’re longer, more specific, and structured for retrieval, not for a ten-blue-links SERP.

This is keyword research for AI search. It’s a different dataset than what we’ve been working with for twenty years. And it’s available right now, for free, in Bing Webmaster Tools.

The decay problem

One more thing worth flagging. Our citations dropped 97% in two months. Citations peaked on December 7 at 5,804 in a single day. By February we’re averaging 34 a day.

Content freshness might matter even more for AI citations than it does for traditional ranking. Or maybe competitors published better content. Or maybe Copilot’s retrieval weights shifted. We don’t know yet.

But the speed of the decline is striking. Publish-and-forget was already a bad strategy. For AI visibility, it might be fatal.

So what do we do with this?

Three things, I think.

First, export your grounding queries and use them as a content roadmap. These are pre-validated topics that real users are asking AI about in your space. If your content doesn’t answer those queries well, that’s a gap you can close.
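One crude way to turn the export into a gap list, sketched below: for each grounding query, measure what fraction of its words appear in any of your pages, and flag the queries nothing covers well. The page text, the 0.6 cutoff, and the function names are all hypothetical, and word overlap is only a first-pass proxy for whether a page actually answers a query.

```python
import re


def tokens(text):
    """Lowercase word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def coverage(query, page_text):
    """Fraction of the query's words that appear in the page text."""
    query_tokens = tokens(query)
    if not query_tokens:
        return 0.0
    return len(query_tokens & tokens(page_text)) / len(query_tokens)


def find_gaps(queries, pages, min_coverage=0.6):
    """Return (query, best_coverage) for queries no page covers well."""
    gaps = []
    for query in queries:
        best = max((coverage(query, text) for text in pages.values()), default=0.0)
        if best < min_coverage:
            gaps.append((query, round(best, 2)))
    return gaps


# Hypothetical page text; in practice, use titles, headings, or body copy.
pages = {
    "/guide": "How to optimize content for AI search engines and answer engines",
}
queries = [
    "optimize content for AI search",
    "AI search optimization GEO platforms competitor tracking pricing features positioning",
]

gaps = find_gaps(queries, pages)
for query, score in gaps:
    print(f"{score:.0%} covered: {query}")
```

Here the first query is fully covered by the guide, while the long GEO query shares only two of its ten words with the page and gets flagged. Anything flagged still needs a human read before it goes on the roadmap.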

Second, stop thinking about AI visibility as a black box. Between Bing’s AI Performance report, tools like Scrunch for cross-platform AI visibility tracking, and content scoring tools like our AI Grader, we now have three data layers that didn’t exist a year ago. We’re still early, but we’re not guessing anymore.

Third, watch the freshness signal. If our 97% citation decline is content-age-related (and not just our data being weird), then AI search is going to demand a very different publishing cadence than what most SEO teams are used to.

The full analysis with interactive charts is on the Search Influence blog. Verify your own site in Bing Webmaster Tools and pull your data. It takes five minutes.